A basic three-layer deep learning network in Python

#coding:utf-8
#neural network class definition
import numpy
import scipy.special  #provides expit(), the sigmoid function


class neuralNetwork:

    #initialise the neural network
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        #set the number of nodes in the input, hidden and output layers
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes

        #link weight matrices, wih (input -> hidden) and who (hidden -> output),
        #sampled from a normal distribution with mean 0
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))

        #set the learning rate
        self.lr = learningrate

        #activation function is the sigmoid function
        self.activation_function = lambda x: scipy.special.expit(x)

    #train the neural network
    def train(self, inputs_list, targets_list):
        #convert inputs and targets lists to 2d column vectors
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        #calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        #calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        #calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        #calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        #output layer error is the (target - actual)
        output_errors = targets - final_outputs
        #hidden layer error is the output_errors, split by weights, recombined at hidden nodes
        hidden_errors = numpy.dot(self.who.T, output_errors)

        #update the weights for the links between the hidden and output layers
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
        #update the weights for the links between the input and hidden layers
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

    #query the neural network
    def query(self, inputs_list):
        #convert inputs list to 2d column vector
        inputs = numpy.array(inputs_list, ndmin=2).T

        #calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        #calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        #calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        #calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        return final_outputs
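The `self.who += ...` line in `train` is a gradient-descent step on the squared error: with $E = \frac{1}{2}\sum (t - o)^2$ and $o = \sigma(W h)$, the gradient is $\partial E / \partial W = -\big((t - o) \odot o \odot (1 - o)\big)\, h^\top$, so adding `lr` times that expression moves `who` downhill. The `wih` update follows the same pattern but propagates the raw `output_errors` back through `who.T` rather than the exact gradient term, so only the `who` update is checked here. The sketch below is not part of the original post; the layer sizes, the seed, and the helper names `sigmoid` and `squared_error` are illustrative assumptions. It compares the analytic expression with a central finite-difference estimate on random data.

```python
import numpy as np

def sigmoid(x):
    # same function as scipy.special.expit used in the class
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)
n_hidden, n_out = 4, 3

# illustrative values: h plays the role of hidden_outputs, t of targets, W of self.who
h = rng.random((n_hidden, 1))
t = rng.random((n_out, 1))
W = rng.normal(0.0, n_out ** -0.5, (n_out, n_hidden))

def squared_error(W):
    """E = 1/2 * sum((t - o)^2) with o = sigmoid(W h)."""
    o = sigmoid(np.dot(W, h))
    return 0.5 * np.sum((t - o) ** 2)

# gradient implied by the who update in train():
# who += lr * ((e * o * (1 - o)) . h^T)  is the same as  who -= lr * dE/dW
o = sigmoid(np.dot(W, h))
e = t - o
analytic = -np.dot(e * o * (1.0 - o), h.T)

# central finite differences, one weight at a time
eps = 1e-6
numeric = np.zeros_like(W)
for i in range(n_out):
    for j in range(n_hidden):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[i, j] += eps
        W_minus[i, j] -= eps
        numeric[i, j] = (squared_error(W_plus) - squared_error(W_minus)) / (2 * eps)

print(np.max(np.abs(analytic - numeric)))  # tiny, far below 1e-6

# one step of the update used in train(), with a small learning rate, lowers the error
lr = 0.1
W_new = W + lr * np.dot(e * o * (1.0 - o), h.T)
print(squared_error(W_new) < squared_error(W))  # True
```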

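Below is a simple three-layer neural network built and trained with TensorFlow. It is written against the TensorFlow 1.x graph API (`tf.placeholder`, `tf.Session`); under TensorFlow 2 these calls live in `tf.compat.v1` and need eager execution disabled.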

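#define a helper function that adds one fully connected layer

import tensorflow as tf

def add_layer(input_x, in_size, out_size, activation_function=None):
    #weight matrix of shape (in_size, out_size), drawn from a standard normal distribution
    weights = tf.Variable(tf.random_normal([in_size, out_size]))
    #bias vector of length out_size, initialised to 0.1
    bias = tf.Variable(tf.zeros([out_size]) + 0.1)
    #affine transform: x*W + b
    wx_plus_b = tf.matmul(input_x, weights) + bias
    #apply the activation if one is given, otherwise the layer stays linear
    if activation_function is None:
        outputs = wx_plus_b
    else:
        outputs = activation_function(wx_plus_b)
    return outputs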
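`add_layer` builds one fully connected layer: it multiplies a batch of row vectors by a weight matrix of shape `(in_size, out_size)`, adds a bias of 0.1 broadcast across the batch, and applies the activation if one is given. Note that each sample is a row here, whereas the NumPy class earlier works on column vectors (`W @ x`). The sketch below reproduces the same forward computation for the 1 → 10 → 1 network used later, without TensorFlow; the function name `dense_forward`, the `relu` helper and the seed are illustrative assumptions, not part of the original.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def dense_forward(x, weights, bias, activation=None):
    """Hypothetical NumPy counterpart of add_layer: activation(x @ W + b)."""
    z = x @ weights + bias  # (batch, in) @ (in, out) + (out,) -> (batch, out)
    return z if activation is None else activation(z)

rng = np.random.default_rng(1)
x = np.linspace(-1, 1, 300)[:, np.newaxis]  # shape (300, 1), like x_data

# same shapes and initial values as add_layer(xs, 1, 10) and add_layer(..., 10, 1)
w1, b1 = rng.normal(size=(1, 10)), np.zeros(10) + 0.1
w2, b2 = rng.normal(size=(10, 1)), np.zeros(1) + 0.1

hidden = dense_forward(x, w1, b1, activation=relu)
out = dense_forward(hidden, w2, b2)
print(hidden.shape, out.shape)  # (300, 10) (300, 1)
```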

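import numpy as np
import matplotlib.pyplot as plt
#IPython/Jupyter magic; remove the next line when running the code as a plain Python script
%matplotlib inline

x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  #add an axis so the data becomes 2-D, shape (300, 1)
noise = np.random.normal(0, 0.1, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

#############data preparation#####################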
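`[:, np.newaxis]` turns the 1-D array of 300 points into a 300 × 1 column, which matches the `[None, 1]` shape of the placeholders (`None` leaves the batch size open). A quick check of those shapes; the seeded generator is an assumption used only so the noise is repeatable:

```python
import numpy as np

x = np.linspace(-1, 1, 300)
print(x.shape)  # (300,)

x_data = x[:, np.newaxis]  # insert a new axis of length 1 -> column vector
print(x_data.shape)  # (300, 1)
print(np.array_equal(x_data, x.reshape(-1, 1)))  # True: same as reshape(-1, 1)

# noisy parabola target, as in the post: y = x^2 - 0.5 + N(0, 0.1^2)
rng = np.random.default_rng(2)
noise = rng.normal(0, 0.1, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise
print(y_data.shape)  # (300, 1)
```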

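#hidden layer: 1 input -> 10 ReLU units
hidden_layer_out = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
#output layer: 10 -> 1, linear (no activation)
final_layer_out = add_layer(hidden_layer_out, 10, 1, activation_function=None)

###################model construction########################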

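loss = tf.reduce_mean(tf.reduce_sum(
    tf.square(ys - final_layer_out),  #compare with the ys placeholder fed at run time
    axis=[1]  #sum along axis 1 (across each row), leaving one value per sample
))
optimizer = tf.train.GradientDescentOptimizer(0.01)  #plain gradient descent, learning rate 0.01
train_op = optimizer.minimize(loss)
init = tf.global_variables_initializer()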
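`optimizer.minimize(loss)` asks TensorFlow to differentiate `loss` with respect to every `tf.Variable` it depends on and, on each `sess.run(train_op, ...)`, to subtract 0.01 times each gradient. To make that step visible, the sketch below trains the same 1 → 10 → 1 ReLU network in plain NumPy with hand-written backpropagation. It is an illustrative re-implementation under stated assumptions (a fixed seed, full-batch updates as in the post), not what TensorFlow executes internally.

```python
import numpy as np

rng = np.random.default_rng(3)

# data, as in the post
x = np.linspace(-1, 1, 300)[:, np.newaxis]
y = np.square(x) - 0.5 + rng.normal(0, 0.1, x.shape)

# parameters with the same shapes and initial values as the two add_layer calls
w1, b1 = rng.normal(size=(1, 10)), np.zeros(10) + 0.1
w2, b2 = rng.normal(size=(10, 1)), np.zeros(1) + 0.1
lr = 0.01  # same learning rate as GradientDescentOptimizer(0.01)
n = x.shape[0]

losses = []
for step in range(5000):
    # forward pass: ReLU hidden layer, linear output layer
    z1 = x @ w1 + b1
    h = np.maximum(z1, 0.0)
    pred = h @ w2 + b2

    # loss = mean over samples of the per-row sum of squared errors
    loss = np.mean(np.sum(np.square(y - pred), axis=1))
    losses.append(loss)

    # backward pass (chain rule by hand)
    d_pred = -2.0 * (y - pred) / n      # dLoss/dpred, shape (300, 1)
    d_w2 = h.T @ d_pred                 # (10, 1)
    d_b2 = d_pred.sum(axis=0)           # (1,)
    d_z1 = (d_pred @ w2.T) * (z1 > 0)   # ReLU passes gradient only where z1 > 0
    d_w1 = x.T @ d_z1                   # (1, 10)
    d_b1 = d_z1.sum(axis=0)             # (10,)

    # plain gradient descent step: param <- param - lr * grad
    w1 -= lr * d_w1
    b1 -= lr * d_b1
    w2 -= lr * d_w2
    b2 -= lr * d_b2

print(losses[0], losses[-1])
```

Writing the gradients by hand like this is exactly the bookkeeping that `minimize` automates for the TensorFlow version.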

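fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
ax.scatter(x_data, y_data)  #plot the noisy training data
plt.ion()  #interactive mode, so showing the figure does not block the training loop
plt.show()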

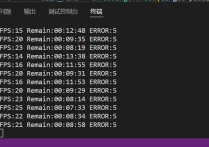

with tf.Session() as sess:
    sess.run(init)
    lines = []  #handle to the most recently drawn fitted line
    for i in range(5000):
        #train
        sess.run(train_op, feed_dict={xs: x_data, ys: y_data})
        if i % 100 == 0:
            if lines:
                lines[0].remove()  #erase the previous fitted line
            prediction_value = sess.run(final_layer_out, feed_dict={xs: x_data})
            lines = ax.plot(x_data, prediction_value, 'r-', lw=3)  #lw: line width of 3
            plt.pause(0.1)
            #print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))